Part 1: Tool-native AI and enterprise integration.

For decades, CIMdata has tracked the role of artificial intelligence (AI) in PLM, particularly within computer-aided technologies such as CAD and CAE. More recently, and especially with the advent of generative AI and now agentic AI, the proposed uses and expected applications have exploded.

CIMdata recognized AI’s greater potential in 2019, when we began discussing AI’s role in augmenting human intelligence in support of the product lifecycle and its enabling technologies. In 2024, we went further, making ‘augmented intelligence’ one of CIMdata’s Critical Dozen, the twelve enabling elements of a digital transformation. Our emphasis then, and still today, is that AI enhances human decision-making rather than replacing it. That distinction matters now more than ever.

As solution providers accelerate AI capabilities across product lifecycle management (PLM) solutions, the conversation has shifted from whether to adopt AI to how to adopt it effectively. Yet many companies are jumping to advanced capabilities without understanding the prerequisites, and the gap between solution provider roadmaps and organizational readiness is leading to expensive failures.

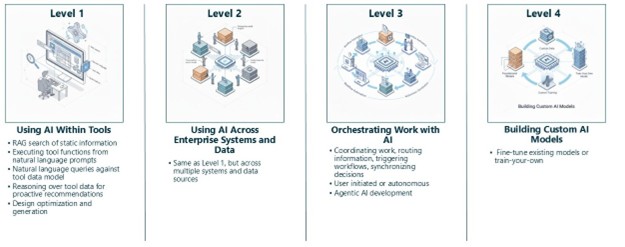

In this two-part article, CIMdata introduces a Four-Level Framework for AI in PLM (Figure 1), developed as a practical tool to navigate this gap and help companies move from basic use to strategic advantage. Each level has distinct prerequisites and capabilities. Understanding these levels will help you make realistic decisions about where to invest and what success requires.

Part 1 covers the foundational levels: using AI within individual tools (Level 1) and extending AI across your enterprise systems (Level 2). Part 2 will tackle orchestrating work with autonomous AI agents (Level 3) and building custom AI models (Level 4), complete with realistic timelines through 2027 and actionable strategic guidance for navigating this transformation.

One clarification: this article addresses the full spectrum of AI in PLM.

This includes traditional machine learning (ML), such as physics-informed neural networks, AI surrogate models for accelerating simulation, predictive maintenance algorithms, and computer vision for quality inspection. It also encompasses AI-assisted topology optimization and generative design, as well as generative AI, including large language models (LLMs) that enable conversational interfaces. While our examples focus primarily on LLM-based AI, since it’s driving current investment decisions, the framework applies equally to all AI types, whether conversational or computational. The prerequisites remain the same: clean data, integrated systems, skilled teams, and governance frameworks.

The Framework: Four Levels of AI Maturity

AI maturity in PLM can be understood through four levels, each with distinct capabilities, prerequisites, and organizational requirements.

Level 1: AI built into individual tools (e.g., CAD, PDM, and simulation). You ask AI for answers using data from that single system. Prerequisites are minimal: tool adoption, basic prompt literacy, and output validation.

Level 2: AI accesses information across your enterprise systems, which are connected through a digital thread. AI synthesizes information from PLM, ERP, manufacturing, procurement, quality, and other enterprise databases. Prerequisites are substantial: digital thread infrastructure, data governance, and integration expertise.

Level 3: AI agents. Instead of just answering questions, as the AI assistants in Levels 1 and 2 do, AI agents monitor systems, detect events, and execute multi-step workflows with varying degrees of autonomy. Prerequisites are extensive: everything from Level 2, plus mature and consistently enforced data governance, years of operational experience, and cultural acceptance of AI acting without human intervention.

Level 4: Organizations build custom AI models for competitive advantage or specialized requirements. This can happen alongside any other level. It requires AI/ML expertise, data science capabilities, compute power, and clear justification.

Most organizations progress sequentially because each level builds capabilities for the next. However, organizations with existing capabilities can start at higher levels. What matters is having properly enabled the prerequisites for your target level.

Level 1: Tool-Native AI (Single-Tool AI That Answers Your Questions)

At Level 1, you’re working with AI assistants that solution providers have integrated directly into their solutions. The pattern is straightforward: you ask a question, and the AI searches data that is stored, indexed, and managed within that specific tool to compose an answer. This represents the simplest form of AI adoption; you purchase the solution and start using the AI.

Level 1 capabilities include:

- Conversational search where engineers ask questions in plain language and get synthesized answers. Tools like Siemens Teamcenter Copilot (their PLM-specific assistant, not to be confused with Siemens Industrial Copilot that will be discussed in Part 2), AnsysGPT, and PTC Creo+ AI Assistant are examples of solutions with this capability.

- Natural language command discovery where engineers ask, “How do I create a circular pattern?” and AI suggests the relevant commands and menu locations, eliminating the need to search through documentation or complex menu structures.

- Natural language queries against tool data, where “show me all fasteners with corrosion resistance rating greater than eight used in marine applications” returns relevant results.

- AI-driven design optimization where generative design algorithms explore design alternatives based on constraints and requirements. For example, Autodesk Fusion 360’s generative design creates optimized geometries from performance requirements and boundary conditions. For specialized domains like ship design or aerospace structures, some solution providers build custom models (Level 4 capabilities) and embed these into their tools, making domain-specific AI acceleration available to Level 1 users without requiring them to develop custom models themselves.

- Proactive recommendations based on patterns in tool data, like flagging “this part is used in 12 assemblies.” Example: Siemens NX’s Design for Manufacture Advisor analyzes geometry and identifies manufacturing challenges.
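To make the natural language query example concrete: behind the conversational interface, the assistant has to turn the request into a structured filter over the tool's own records. The Python sketch below shows only that final step, assuming a hypothetical in-memory parts table and a hand-written filter standing in for what the AI would generate; the part numbers, fields, and the `structured_query` function are illustrative, not any vendor's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Part:
    part_number: str
    category: str
    corrosion_rating: int  # hypothetical 0-10 scale
    applications: frozenset[str]


# Hypothetical records stored inside a single tool (e.g., a PDM vault).
PARTS = [
    Part("FST-1001", "fastener", 9, frozenset({"marine", "automotive"})),
    Part("FST-1002", "fastener", 6, frozenset({"marine"})),
    Part("FST-1003", "fastener", 10, frozenset({"aerospace"})),
    Part("BRK-2001", "bracket", 9, frozenset({"marine"})),
]


def structured_query(parts, category, min_corrosion, application):
    """Filter an assistant might generate from the request 'show me all
    fasteners with corrosion resistance rating greater than eight used
    in marine applications'. Only fields this one tool stores are usable."""
    return [
        p
        for p in parts
        if p.category == category
        and p.corrosion_rating > min_corrosion
        and application in p.applications
    ]


results = structured_query(PARTS, "fastener", 8, "marine")
print([p.part_number for p in results])  # ['FST-1001']
```

Note what the filter cannot express: a follow-up such as "and the supplier can deliver within two weeks" has no field to match, because supplier data lives outside the tool.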

Prerequisites are minimal, which is why Level 1 adoption is spreading quickly. Teams need basic training, prompt literacy built through practice, and the ability to validate outputs. Plan for 3 to 6 months of proper adoption and skill development.

The boundaries of Level 1 matter. At this level, reasoning is constrained to what’s in the solution. AI can’t access information from other systems or predict impacts across the enterprise. When your engineers say, “This would be better if it knew about supplier capabilities” or “I need cost data from ERP,” you’re ready for Level 2.

By the end of 2026, Level 1 capabilities are expected to be near-universal, and organizations without solution-native AI capabilities will be at a disadvantage. Level 1 is becoming table stakes rather than a competitive advantage.

Level 2: AI Across Enterprise Systems

At Level 2, AI extends beyond individual solutions to access information across an enterprise. The interaction pattern remains the same (the user asks, AI answers), but now AI synthesizes data from multiple connected solution domains (e.g., PLM, ERP, manufacturing, procurement, and quality). This integration enables more comprehensive responses, supporting better-informed engineering decisions and cross-functional collaboration.

Here’s why this matters. An engineer asks, “What’s the impact if I switch this fastener from steel to aluminum?” At Level 1, the PLM solution responds based only on its own data: “This part is used in 12 assemblies.” At Level 2, AI reasons across systems: “This part is used in 12 assemblies (PLM where-used). Material cost drops from $2.40 to $0.65 (ERP), but the supplier minimum order quantity (MOQ) increases from 100 to 1,000 units (procurement). Current inventory shows 450 steel fasteners (inventory management). Lead time for aluminum is 8 weeks vs. 2 weeks for steel (supply chain). Note: Engineering flagged potential galvanic corrosion issues when mixing aluminum fasteners with steel assemblies in marine environments (shared drive). Recommend review before proceeding.” The engineer reviews this synthesis and makes the decision.
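The fastener example can be sketched as a fan-out over per-system lookups whose results are merged into one answer. In the minimal Python sketch below, each dictionary is a hypothetical stand-in for a connector to one system (PLM, ERP, procurement, inventory, supply chain), hard-coded with the illustrative figures from the example; the part number `FST-1001` and the `assess_material_change` function are invented for illustration, and the shared-drive note is omitted for brevity. A real deployment would query live systems through the digital thread. The structural point is that every fact carries its system of record, so the engineer can trace the synthesis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    source: str  # system of record the fact came from
    text: str


# Hypothetical per-system data standing in for live connectors.
# Figures are the illustrative ones from the fastener example.
PLM_WHERE_USED = {"FST-1001": 12}
ERP_UNIT_COST = {"steel": 2.40, "aluminum": 0.65}
PROCUREMENT_MOQ = {"steel": 100, "aluminum": 1000}
INVENTORY_ON_HAND = {("FST-1001", "steel"): 450}
SUPPLY_LEAD_WEEKS = {"steel": 2, "aluminum": 8}


def assess_material_change(part: str, old: str, new: str) -> list[Finding]:
    """Gather cross-system facts for a proposed material switch."""
    return [
        Finding("PLM", f"Used in {PLM_WHERE_USED[part]} assemblies"),
        Finding("ERP", f"Material cost ${ERP_UNIT_COST[old]:.2f} -> ${ERP_UNIT_COST[new]:.2f}"),
        Finding("procurement", f"Supplier MOQ {PROCUREMENT_MOQ[old]} -> {PROCUREMENT_MOQ[new]} units"),
        Finding("inventory", f"{INVENTORY_ON_HAND.get((part, old), 0)} {old} units on hand"),
        Finding("supply chain", f"Lead time {SUPPLY_LEAD_WEEKS[new]} weeks vs {SUPPLY_LEAD_WEEKS[old]} weeks"),
    ]


for finding in assess_material_change("FST-1001", "steel", "aluminum"):
    print(f"[{finding.source}] {finding.text}")
```

Handing the collected findings to an LLM to phrase the recommendation is the step an enterprise assistant would add; the sketch stops before it so the cross-system gathering stays visible.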

This is “buy with some build and maintain” AI adoption. You’ll buy enterprise solutions with integration capabilities, but you’ll need to build the infrastructure connecting them into a digital thread and implement AI capabilities that query across this infrastructure. You also need to maintain the data quality that makes it work.

Several solution providers offer capabilities spanning multiple solution domains, though these generally work within a single solution provider’s ecosystem. Oracle AI for Fusion and SAP Joule, for example, offer capabilities across their integrated suites. However, when integrating across multiple solution providers, which most organizations need, you must build your own integration infrastructure. Platforms like Databricks, Snowflake, or similar data lakehouse solutions can serve as the integration layer, but implementing and maintaining these requires significant internal expertise and ongoing effort.

Level 2 demands substantial investment, and that investment is what separates leaders from followers. The data foundation takes time: you need clean, validated data with consistent metadata across PLM, ERP, MES, quality, and supply chain solutions. The digital thread must actually work, preserving cross-solution relationships and synchronizing data in real time. Plan for a minimum 18- to 24-month pilot and implementation timeline; 24 to 36 months is more realistic given typical overruns.
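Why consistent metadata matters can be shown with a simple join. In this hypothetical Python sketch, PLM and ERP record the same parts under differently formatted part numbers, an inconsistency assumed here for illustration. A join on the raw keys finds nothing; only after an agreed normalization rule is applied do the records line up. The records, prices, and the `normalize` rule are all hypothetical; real data governance would define its own rules.

```python
import re
from typing import Callable

# Hypothetical extracts: the same parts, keyed inconsistently by two systems.
plm_parts = {"FST-1001": "Hex bolt M8", "FST-1002": "Washer M8"}
erp_costs = {"fst1001": 2.40, "FST 1002 ": 0.12}


def normalize(part_number: str) -> str:
    """Assumed governance rule: drop whitespace, hyphens, underscores; uppercase."""
    return re.sub(r"[\s\-_]", "", part_number).upper()


def join(
    plm: dict[str, str],
    erp: dict[str, float],
    key: Callable[[str], str] = lambda k: k,
) -> dict[str, tuple[str, float]]:
    """Match PLM descriptions to ERP costs on a (possibly normalized) key."""
    erp_by_key = {key(k): v for k, v in erp.items()}
    return {
        key(pn): (desc, erp_by_key[key(pn)])
        for pn, desc in plm.items()
        if key(pn) in erp_by_key
    }


print(join(plm_parts, erp_costs))  # {}
print(join(plm_parts, erp_costs, normalize))
# {'FST1001': ('Hex bolt M8', 2.4), 'FST1002': ('Washer M8', 0.12)}
```

In practice the fix belongs upstream, as a governance rule enforced where the data is created, rather than as a patch applied in every downstream query.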

On the technical side, you need deep integration through robust APIs. Retrieval-augmented generation (RAG), which retrieves relevant enterprise records and supplies them to the AI model as context, is becoming standard practice for connecting AI models to your actual enterprise data. However, effective RAG requires properly indexed data and specialized skills. If your data isn’t clean and well indexed, RAG can’t retrieve the right information, regardless of which AI solution provider you choose.
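The retrieval step of RAG can be sketched in a few lines. The Python below uses simple bag-of-words cosine similarity as a stand-in for the vector embeddings production systems typically use; the indexed records, their source tags, and the prompt template are hypothetical. It ranks indexed records against a question, keeps the top k, and assembles them into the context an LLM would receive. A record that was never indexed, or was indexed with the wrong terms, simply never appears in that context.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical indexed records, each tagged with its system of record.
INDEX = [
    ("quality", "Galvanic corrosion risk when aluminum fasteners contact steel in marine assemblies"),
    ("ERP", "Aluminum fastener unit cost 0.65 steel fastener unit cost 2.40"),
    ("PLM", "Bracket BRK-2001 released revision C"),
]


def retrieve(question: str, k: int = 2) -> list[tuple[str, str]]:
    """Return the k indexed records most similar to the question."""
    q = tokenize(question)
    ranked = sorted(INDEX, key=lambda rec: cosine(q, tokenize(rec[1])), reverse=True)
    return ranked[:k]


def build_prompt(question: str) -> str:
    """Assemble retrieved records into the context passed to an LLM."""
    context = "\n".join(f"[{src}] {text}" for src, text in retrieve(question))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_prompt("What are the risks of aluminum fasteners in marine steel assemblies?"))
```

Swapping the word-count scorer for an embedding model improves ranking quality but leaves the shape of the pipeline unchanged: index, retrieve the top k, assemble context, generate.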

The organizational challenges often exceed the technical ones. You need data governance frameworks, integration expertise across teams, and serious change management. Research published in 2021 by the MIT Center for Information Systems Research (CISR) found that while organizations are rolling out data literacy programs (i.e., training employees to read, interpret, and use data), training alone is insufficient; organizations must ensure people actually engage with and experience data to build true capability. Cultural resistance is real, and organizational change management (OCM) is critical to success.

When evaluating solution providers, ask directly, “How open is your platform to third-party AI? What APIs enable digital thread integration?” Integrated suites like Oracle Fusion or SAP have advantages within their ecosystems. Open platforms like Aras Innovator, Siemens Teamcenter, PTC Windchill, and others enable third-party AI integration and flexibility.

Level 1 becomes universal quickly because solution providers deliver it as built-in features. Level 2 requires sustained investment in digital thread infrastructure and data governance. Success depends on execution discipline, not on buying software.

Organizations progress to Level 3 when Level 2 operates reliably, data governance is established and followed consistently, and the organization is ready to delegate decision-making to AI within defined boundaries. This typically requires years beyond Level 2.

Understanding AI maturity levels helps you make realistic decisions about where to invest and what success requires. Level 1 provides immediate value with minimal prerequisites and is expected to become universal by the end of 2026. Level 2 delivers a competitive advantage, but it demands substantial investment in digital thread infrastructure and data governance.

Organizations investing in Level 1 now, while building the Level 2 foundation in parallel, are positioning themselves well. The question isn’t whether your organization will adopt these capabilities. The question is whether you’ll understand what’s needed to succeed, including what to adopt and how fast.

In Part 2, we’ll explore Level 3 (orchestrating work with AI) and Level 4 (building custom models), provide realistic adoption timelines through 2027, and offer strategic guidance based on what’s actually happening in manufacturing companies implementing AI-enabled PLM.